The most recent Docker State of Application Development Survey results offer insights into how developers are adopting and utilizing AI, reflecting a shift toward more intelligent, efficient, and adaptable development methodologies. This transformation is part of a larger trend observed across the tech industry as AI becomes increasingly central to software development.

The annual Docker State of Application Development survey, conducted by our User Research Team, is one way Docker product managers, engineers, and designers gather insights from Docker users to continuously develop and improve the suite of tools the company offers. For example, in Docker’s 2022 State of Application Development Survey, we found that the task for which Docker users most often refer to support/documentation was creating a Dockerfile (reported by 60% of respondents). This finding helped spur the innovation of Docker AI.

More than 1,300 developers participated in the latest Docker State of Application Development survey, conducted in late 2023. The online survey asked respondents about what tools they use, their application development processes and frustrations, feelings about industry trends, Docker usage, and participation in developer communities. We wanted to know where developers are focused, what they’re working on, and what is most important to them.

Of the approximately 1,300 respondents to the survey, 885 completed it. The findings in this report are based on the 885 completed responses.

Who responded to the Docker survey?

Respondents who took our survey ranged from home hobbyists to professionals at companies with more than 5,000 employees. Forty-two percent of respondents are working for a small company (up to 100 employees), 28% of participants say they work for mid-sized companies (between 100 and 1,000 employees), and 25% work for large companies (more than 1,000 employees).

Well over half of the respondents were in engineering roles: 36% of respondents identified as back-end or full-stack developers; 21% were DevOps, infrastructure managers, or platform engineers; and 4% were front-end developers. Other roles of respondents included dev/engineering managers, company leadership, product managers, security roles, and AI/ML roles. There was a nearly even split between respondents with more experience (6+ years, 54%) and those with less experience (0-5 years, 46%).

Our survey underscored a marked growth in roles focused on machine learning (ML) engineering and data science within the Docker ecosystem. In our 2022 survey, approximately 1% of respondents represented this demographic, whereas they made up 8% in the most recent survey. ML engineers and data scientists represent a rapidly expanding user base. This signals the growing relevance of AI to the software development field, and the blurring of the lines between tools used by developers and tools used by AI/ML scientists.

More than 34% of respondents said they work in the computing or IT/SaaS industry, but we also saw responses from individuals working in accounting, banking, or finance (8%); business, consultancy, or management (7%); engineering or manufacturing (6%); and education (5%). Other responses came in from professionals in a wide range of fields, including media; academic research; transport or logistics; retail; marketing, advertising, or PR; charity or volunteer work; healthcare; construction; creative arts or design; and environment or agriculture.

Docker users made up 87% of our respondents, whereas 13% reported that they do not use Docker.

AI as an up-and-coming trend

We asked participants what they felt were the most important trends currently in the industry. GenAI (40% of respondents) and AI assistants for software engineering (38% of respondents) were the top-selected options identified as important industry trends in software development. More senior developers (back-end, front-end, and full-stack developers with over 5 years of experience) tended to view GenAI as most important, whereas more junior developers (less than 5 years of experience) view AI assistants for software engineering as most important. This difference may signal varied and unique uses of AI throughout a career in software development.

It’s clearly trendy, but how do developers really feel about AI? The majority (65%) agree that AI is a positive option, and many say that it makes their jobs easier (61%) and allows them to focus on more important tasks (55%). A much smaller number of respondents see AI as a threat to their jobs (23%) or say it makes their jobs more difficult (19%).

Interestingly, despite high usage and generally positive feelings towards AI, 45% of respondents also reported that they feel AI is over-hyped. Why might this be? It’s not fully clear, but when this finding is considered alongside responses to perception of job threat, one possible answer could be entertained: respondents may be viewing AI as a critical and useful tool for their work, but they’re not too worried about the hype of it replacing them anytime soon.

How AI is used in the developer’s world

We asked users what they use AI for, how dependent they feel on AI, and what AI tools they use most often. A majority of developers (64%) already report using AI for work, underscoring AI’s penetration into the software development field. Developers leverage AI at work mainly for coding (33% of respondents), writing documentation (29%), research (28%), writing tests (23%), troubleshooting/debugging (21%), and CLI commands (20%).

For the 568 respondents who indicated they use AI for work, we also asked how dependent they felt on AI to get their job done on a scale of 0 (not at all dependent) to 10 (completely dependent). Responses ranged substantially and varied by role and years of experience, but the overall average reported dependence was about 4 out of 10, indicating relatively low dependence.
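As a quick sanity check, the 568 AI users and the 885 completed responses reported above line up with the 64% figure. The snippet below is only an illustrative calculation; it assumes the 64% share is computed over the 885 completed responses, and the variable names are ours rather than part of the survey data.

```python
# Figures quoted in this report.
completed_responses = 885  # completed survey responses
ai_users = 568             # respondents who said they use AI for work

# Share of completed responses that report using AI for work (assumed base: 885).
share = ai_users / completed_responses * 100
print(f"{share:.1f}% of completed responses report using AI for work")
```

Rounded to the nearest whole percent, this gives the 64% cited above.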

In the developer toolkit, respondents indicate that AI tools like ChatGPT (46% of respondents), GitHub Copilot (30%), and Bard (19%) stand out as most frequently used.

Conclusion

To conclude our 2024 Docker AI Trends Report: artificial intelligence is already shifting the way software development is approached. The insights from more than 800 respondents in our latest survey illuminate a path toward a future where AI is seamlessly integrated into every aspect of application development. From coding and documentation to debugging and writing tests, AI tools are becoming indispensable in enhancing efficiency and problem-solving capabilities, allowing developers to focus on more creative and important work.

The uptake of AI tools such as ChatGPT, GitHub Copilot, and Bard among developers is a testament to AI’s value in the development process. Moreover, the growing interest in machine learning engineering and data science within the Docker community signals a broader acceptance and integration of AI technologies.

As Docker continues to innovate and support developers in navigating these changes, the evolving landscape of AI in software development presents both opportunities and challenges. Embracing AI as a positive force that can augment human capabilities rather than replace them is crucial. Docker is committed to facilitating this transition by providing tools and resources that empower developers to leverage AI effectively, ensuring they can remain at the forefront of technological innovation.

Looking ahead, Docker will continue to monitor these trends, adapt our offerings accordingly, and support our user community in harnessing the full potential of AI in software development. As the industry evolves, so too will Docker’s role in shaping the future of application development, ensuring our users are equipped to meet the challenges and seize the opportunities that lie ahead in this exciting era of AI-driven development.

Learn more

- Introducing a New GenAI Stack: Streamlined AI/ML Integration Made Easy

- Get started with the GenAI Stack: Langchain + Docker + Neo4j + Ollama

- Building a Video Analysis and Transcription Chatbot with the GenAI Stack

- Docker Partners with NVIDIA to Support Building and Running AI/ML Applications

- Build Multimodal GenAI Apps with OctoAI and Docker

- How IKEA Retail Standardizes Docker Images for Efficient Machine Learning Model Deployment

- Case Study: How Docker Accelerates ZEISS Microscopy’s AI Journey

- Docker AI: From Prototype to CI/CD Pipeline solutions brief

- Containerize a GenAI app (use case guide)

Docker’s User Research Team — Olga Diachkova, Julia Wilson, and Rebecca Floyd — conducted this survey, analyzed the results, and provided insights.

For a complete methodology, contact uxresearch@docker.com.

And the world of application software development changed forever.

Docker was built on the shoulders of giants of the Linux kernel, copy-on-write file systems, and developer-friendly git semantics. The result? Docker has fundamentally transformed how developers build, share, and run applications. By “dockerizing” an app and its dependencies into a standardized, open format, Docker dramatically lowered the friction between devs and ops, enabling devs to focus on their apps — what’s inside the container — and ops to focus on deploying any app, anywhere — what’s outside the container, in a standardized format. Furthermore, this standardized “unit of work” that abstracts the app from the underlying infrastructure enables an “inner loop” for developers of code, build, test, verify, and debug, which results in 13X more frequent releases of higher-quality, more secure updates.

The subsequent energy over the past 11 years from the ecosystem of developers, community, open source maintainers, partners, and customers cannot be overstated, and we are so thankful and appreciative of your support. This has shown up in many ways, including the following:

- Ranked #1 “most-wanted” tool/platform by Stack Overflow’s developer community for the past four years

- 26 million monthly active IPs accessing 15 million repos on Docker Hub, pulling them 25 billion times per month

- 17 million registered developers

- Moby project has 67.5k stars, 18.5k forks, and more than 2,200 contributors; Docker Compose has 32.1k stars and 5k forks

- A vibrant network of 70 Docker Captains across 25 countries serving 167 community meetup groups with more than 200k members and 4,800 total meetups

- 79,000+ customers

The next decade

In our first decade, we changed how developers build, share, and run any app, anywhere — and we’re only accelerating in our second!

Specifically, you’ll see us double down on meeting development teams where they are to enable them to rapidly ship high-quality, secure apps via the following focus areas:

- Dev Team Productivity. First, we’ll continue to help teams take advantage of the right tech for the right job, whether that’s Linux containers, Windows containers, serverless functions, and/or Wasm (WebAssembly), with the tools they love and the skills they already possess. Second, by bringing together the best of local and the best of cloud, we’ll enable teams to discover and address issues even faster in the “inner loop,” as you’re already seeing today with our early efforts with Docker Scout, Docker Build Cloud, and Testcontainers Cloud.

- GenAI. This tech is ushering in a “golden age” for development teams, and we’re investing to help in two areas: First, our GenAI Stack — built through collaboration with partners Ollama, LangChain, and Neo4j — enables dev teams to quickly stand up secure, local GenAI-powered apps. Second, our Docker AI is uniquely informed by anonymized data from dev teams using Docker, which enables us to deliver automations that eliminate toil and reduce security risks.

- Software Supply Chain. The software supply chain is heterogeneous, extensive, and complex for dev teams to navigate and secure, and Docker will continue to help simplify, make more visible, and manage it end-to-end. Whether it’s the trusted content “building blocks” of Docker Official Images (DOI) in Docker Hub, the transformation of ingredients into runnable images via BuildKit, verifying and securing the dev environment with digital signatures and enhanced container isolation, consuming metadata feedback from running containers in production, or making the entire end-to-end supply chain visible and issues actionable in Docker Scout, Docker has it covered and helps make a more secure internet!

Dialing it past 11

While our first decade was fantastic, there’s so much more we can do together as a community to serve app development teams, and we couldn’t be more excited as our second decade together gets underway and we dial it past 11! If you haven’t already, won’t you join us today?!

How has Docker influenced your approach to software development? Share your experiences with the community and join the conversation on LinkedIn.

Let’s build, share, and run — together!


Meet us at our KubeCon booth, sessions, and events to learn about the latest trends in AI productivity and best practices in cloud-native development with Docker. At our KubeCon booth (#J3), we’ll show you how building in the cloud accelerates development and simplifies multi-platform builds with a side-by-side demo of Docker Build Cloud. Learn how Docker and Testcontainers Cloud provide a seamless integration within the testing framework to improve the quality and speed of application delivery.

It’s not all work, though — join us at the booth for our Megennis Motorsport Racing experience and try to beat the best!

Take advantage of this opportunity to connect with the Docker team, learn from the experts, and contribute to the ever-evolving cloud-native landscape. Let’s shape the future of cloud-native technologies together at KubeCon!

Deep dive sessions from Docker experts

Is Your Image Really Distroless? — Docker software engineer Laurent Goderre will dive into the world of “distroless” Docker images on Wednesday, March 20. In this session, Goderre will explain the significance of separating build-time and run-time dependencies to enhance container security and reduce vulnerabilities. He’ll also explore strategies for configuring runtime environments without compromising security or functionality. Don’t miss this must-attend session for KubeCon attendees keen on fortifying their Docker containers.

Simplified Inner and Outer Cloud Native Developer Loops — Docker Staff Community Relations Manager Oleg Šelajev and Diagrid Customer Success Engineer Alice Gibbons tackle the challenges of developer productivity in cloud-native development. On Wednesday, March 20, they will present tools and practices to bridge the gap between development and production environments, demonstrating how a unified approach can streamline workflows and boost efficiency across the board.

Engage, learn, and network at these events

Security Soiree: Hands-on cloud-native security workshop and party — Join Sysdig, Snyk, and Docker on March 19 for cocktails, team photos, music, prizes, and more at the Security Soiree. Listen to a compelling panel discussion led by industry experts, including Docker’s Director of Security, Risk & Trust, Rachel Taylor, followed by an evening of networking and festivities. Get tickets to secure your invitation.

Docker Meetup at KubeCon: Development & data productivity in the age of AI — Join us at our meetup during KubeCon on March 21 and hear insights from Docker, Pulumi, Tailscale, and New Relic. This networking mixer at Tonton Becton Restaurant promises candid discussions on enhancing developer productivity with the latest AI and data technologies. Reserve your spot now for an evening of casual conversation, drinks, and delicious appetizers.

See you March 19 – 22 at KubeCon + CloudNativeCon Europe

We look forward to seeing you in Paris — safe travels and prepare for an unforgettable experience!

Learn more

- New to Docker? Create an account.

- Learn about Docker Build Cloud.

- Subscribe to the Docker Newsletter.

- Read about what rolled out in Docker Desktop 4.27, including synchronized file shares, Docker Init GA, a private marketplace for extensions, Moby 25, support for Testcontainers with ECI, Docker Build Cloud, and Docker Debug Beta.

Along with our investments in bringing access to cloud resources within the local Docker Desktop experience with Docker Build Cloud Builds view, this release provides a more efficient and flexible platform for development teams.

Introducing enhanced file-sharing controls in Docker Desktop Business

As we continue to innovate and elevate the Docker experience for our business customers, we’re thrilled to unveil significant upgrades to the Docker Desktop’s Hardened Desktop feature. Recognizing the importance of administrative control over Docker Desktop settings, we’ve listened to your feedback and are introducing enhancements prioritizing security and ease of use.

For IT administrators and non-admin users, Docker now offers the much-requested capability to specify and manage file-sharing options directly via Settings Management (Figure 1). This includes:

- Selective file sharing: Choose your preferred file-sharing implementation directly from Settings > General, where you can choose between VirtioFS, gRPC FUSE, or osxfs. VirtioFS is only available for macOS versions 12.5 and above and is turned on by default.

- Path allow-listing: Precisely control which paths users can share files from, enhancing security and compliance across your organization.
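To make the idea of path allow-listing concrete, here is a minimal sketch of the kind of check involved: a requested share is accepted only if it sits inside an approved directory. This is an illustration under our own assumptions, not Docker Desktop's implementation; the function name `is_share_allowed` and the example paths are hypothetical.

```python
from pathlib import Path


def is_share_allowed(candidate: str, allowed_dirs: list[str]) -> bool:
    """Return True if `candidate` is one of the allow-listed directories or inside one."""
    # Resolve to an absolute, normalized path so ".." segments cannot escape the allow-list.
    path = Path(candidate).resolve()
    for allowed in allowed_dirs:
        root = Path(allowed).resolve()
        if path == root or root in path.parents:
            return True
    return False


# Hypothetical allow-list and requests, for illustration only.
allowed = ["/Users/alice/projects"]
print(is_share_allowed("/Users/alice/projects/app", allowed))
print(is_share_allowed("/Users/alice/projects/../.ssh", allowed))
```

The first request falls under the allow-listed directory; the second normalizes to a location outside it and is rejected. Normalizing before comparing is the important design choice, since a plain string-prefix check would accept the second path.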

We’ve also reimagined the Settings > Resources > File Sharing interface to enhance your interaction with Docker Desktop (Figure 2). You’ll notice:

- Clearer error messaging: Quickly understand and rectify issues with enhanced error messages.

- Intuitive action buttons: Experience a smoother workflow with redesigned action buttons, making your Docker Desktop interactions as straightforward as possible.

These enhancements are not just about improving current functionalities; they’re about unlocking new possibilities for your Docker experience. From increased security controls to a more navigable interface, every update is designed with your efficiency in mind.

Refining development with Docker Desktop’s Builds view update

Docker Desktop’s previous update introduced Docker Build Cloud integration, aimed at reducing build times and improving build management. In this release, we’re landing incremental updates that refine the Builds view, making it easier and faster to manage your builds.

New in Docker Desktop 4.28:

- Dedicated tabs: Separates active from completed builds for better organization (Figure 3).

- Build insights: Displays build duration and cache steps, offering more clarity on the build process.

- Reliability fixes: Resolves issues with updates for a more consistent experience.

- UI improvements: Updates the empty state view for a clearer dashboard experience (Figure 4).

These updates are designed to streamline the build management process within Docker Desktop, leveraging Docker Build Cloud for more efficient builds.

To explore how Docker Desktop and Docker Build Cloud can optimize your development workflow, read our Docker Build Cloud blog post. Experience the latest Builds view update to further enrich your local, hybrid, and cloud-native development journey.

These Docker Desktop updates support improved platform security and a better user experience. By introducing more detailed file-sharing controls, we aim to provide developers with a more straightforward administration experience and secure environment. As we move forward, we remain dedicated to refining Docker Desktop to meet the evolving needs of our users and organizations, enhancing their development workflows and agility to innovate.

Join the conversation and make your mark

Dive into the dialogue and contribute to the evolution of Docker Desktop. Use our feedback form to share your thoughts and let us know how to improve the Hardened Desktop features. Your input directly influences the development roadmap, ensuring Docker Desktop meets and exceeds our community and customers’ needs.

Learn more

- Authenticate and update to receive the newest Docker Desktop features per your subscription level.

- New to Docker? Create an account.

- Read our latest blog on synchronized file shares.

- Read about what rolled out in Docker Desktop 4.27, including synchronized file shares, Docker Init GA, a private marketplace for extensions, Moby 25, support for Testcontainers with ECI, Docker Build Cloud, and Docker Debug Beta.

- Learn about Docker Build Cloud.

- Subscribe to the Docker Newsletter.

This strategic move opens a new chapter for Docker and our customers, who will benefit from an integrated go-to-market approach between Docker and AWS.

Advantages of embracing the AWS ISV Accelerate Program include:

Strategic collaboration

The program fosters a deeper collaboration between Docker and AWS, creating a unique platform for both parties to work closely with AWS experts. This collaboration is pivotal for optimizing Docker’s solutions for AWS services, ensuring deployment and an enhanced onboarding experience for customers.

Market expansion

Leveraging existing agreements with AWS, customers can easily procure Docker’s offerings, capitalizing on benefits like AWS Marketplace Enterprise Discounts (EDP) and simplified procurement processes. With both go-to-market teams working closely together, customers will benefit from more tailored solutions.

Technical enablement

The AWS ISV Accelerate Program provides Docker with a wealth of technical resources and guidance, empowering us to enhance product offerings tailored for AWS customers. From comprehensive training on AWS services to best practices and meticulous architectural reviews, Docker is well-equipped to ensure our solutions are compatible and optimized for the cloud.

Conclusion

Docker’s entry into the AWS ISV Accelerate Program marks a significant milestone in our collaborative journey with AWS. The program’s robust support structure, coupled with AWS’s unparalleled global reach, propels Docker into a position where we are accelerating our growth and actively supporting our customers in delivering an exceptional developer experience.

If you are a customer eager to explore the benefits of this dynamic partnership, we encourage you to reach out to your dedicated Docker Account Executive or contact sales. Unleash the power of this collaboration and deliver great developer experiences to your teams.

Learn more

- Get the latest release of Docker Desktop.

- Vote on what’s next! Check out our public roadmap.

- Have questions? The Docker community is here to help.

- New to Docker? Get started.

I’m thrilled to announce that Docker is whale-coming AtomicJar, the makers of Testcontainers, to the Docker family. With its support for Java, .NET, Go, Node.js, and six other programming languages, together with its container-based testing automation, Testcontainers has become the de facto standard test framework for the developer’s “inner loop.” Why? The results speak for themselves: Testcontainers enables step-function improvements in both the quality and speed of application delivery.

This addition continues Docker’s focus on improving the developer experience to maximize the time developers spend building innovative apps. Docker already accelerates the “inner loop” app development steps — build, verify (through Docker Scout), run, debug, and share — and now, with AtomicJar and Testcontainers, we’re adding “test.” As a result, developers using Docker will be able to deliver quality applications with less effort, even faster than before.

Testcontainers itself is a great open source success story in the developer tools ecosystem. Last year, Testcontainers saw a 100% increase in Docker Hub pulls, from 50 million to 100 million, making it one of the fastest-growing Docker Hub projects. Furthermore, Testcontainers has transformed testing at organizations like DoorDash, Netflix, Spotify, Uber, and thousands more.

One of the more exciting things about whale-coming AtomicJar is bringing together our open source communities. Specifically, the Testcontainers community has deep roots in the programming language communities above. We look forward to continuing to support the Testcontainers open source project and to seeing what our teams do to expand it further.

Please join me in whale-coming AtomicJar and Testcontainers to Docker!

sj

FAQ | Docker Acquisition of AtomicJar

With Docker’s acquisition of AtomicJar and associated Testcontainers projects, you’re sure to have questions. We’ve answered the most common ones in this FAQ.

As with all of our open source efforts, Docker strives to do right by the community. We want this acquisition to benefit everyone — community and customer — in keeping with our developer obsession.

What will happen to Testcontainers Cloud customers?

Testcontainers Cloud, AtomicJar’s paid offering, will continue while we work to develop new and better integration options. Existing Testcontainers Cloud subscribers will see an update to the supplier on their invoices, but no other billing changes will occur.

Will Testcontainers become closed-source?

There are no plans to change the licensing structure of Testcontainers’s open source components. Docker has always valued the contributions of open source communities.

Will Testcontainers or its companion projects be discontinued?

There are no plans to discontinue any Testcontainers projects.

Will people still be able to contribute to Testcontainers’s open source projects?

Yes! Testcontainers has always benefited from outside collaboration in the form of feedback, discussion, and code contributions, and there’s no desire to change that relationship. For more information about how to participate in Testcontainers’s development, see the contributing guidelines for Java, Go, and .NET.

What about other downstream users, companies, and projects using Testcontainers?

Testcontainers’s open source licenses will continue to allow the embedding and use of Testcontainers by other projects, products, and tooling.

Who will provide support for Testcontainers projects and products?

In the short term, support for Testcontainers’s projects and products will continue to be provided through the existing support channels. We will work to merge support into Docker’s channels in the near future.

How can I get started with Testcontainers?

To get started with Testcontainers, follow this guide or one of the guides for a language of your choice.

“Docker is more than a container tool. It comprises multiple developer tools that have become the industry standard for self-service developer platforms, empowering teams to be more efficient, secure, and collaborative,” says Docker CEO Scott Johnston. “Bringing Mutagen into the Docker family is another example of how we continuously evolve our offering to meet the needs of developers with a product that works seamlessly and improves the way developers work.”

The Mutagen acquisition introduces novel mechanisms for developers to extract the highest level of performance from their local hardware while simultaneously opening the gateway to the newest remote development solutions. We continue scaling the abilities of Docker Desktop to meet the needs of the growing number of developers, businesses, and enterprises relying on the platform.

“Docker Desktop is focused on equipping every developer and dev team with blazing-fast tools to accelerate app creation and iteration by harnessing the combined might of local and cloud resources. By seamlessly integrating and magnifying Mutagen’s capabilities within our platform, we will provide our users and customers with unrivaled flexibility and an extraordinary opportunity to innovate rapidly,” says Webb Stevens, General Manager, Docker Desktop.

“There are so many captivating integration and experimentation opportunities that were previously inaccessible as a third-party offering,” says Jacob Howard, the CEO at Mutagen. “As Mutagen’s lead developer and a Docker Captain, my ultimate goal has always been to enhance the development experience for Docker users. As an integral part of Docker’s technology landscape, Mutagen is now in a privileged position to achieve that goal.”

Jacob will join Docker’s engineering team, spearheading the integration of Mutagen’s technologies into Docker Desktop and other Docker products.

You can get started with Mutagen today by downloading the latest version of Docker Desktop and installing the Mutagen extension, available in the Docker Extensions Marketplace. Support for current Mutagen offerings, open source and paid, will continue as we develop new and better integration options.

FAQ | Docker Acquisition of Mutagen

With Docker’s acquisition of Mutagen, you’re sure to have questions. We’ve answered the most common ones in this FAQ.

As with all of our open source efforts, Docker strives to do right by the community. We want this acquisition to benefit everyone — community and customer — in keeping with our developer obsession.

What will happen to Mutagen Pro subscriptions and the Mutagen Extension for Docker Desktop?

Both will continue as we evaluate and develop new and better integration options. Existing Mutagen Pro subscribers will see an update to the supplier on their invoices, but no other billing changes will occur.

Will Mutagen become closed-source?

There are no plans to change the licensing structure of Mutagen’s open source components. Docker has always valued the contributions of open source communities.

Will Mutagen or its companion projects be discontinued?

There are no plans to discontinue any Mutagen projects.

Will people still be able to contribute to Mutagen’s open source projects?

Yes! Mutagen has always benefited from outside collaboration in the form of feedback, discussion, and code contributions, and there’s no desire to change that relationship. For more information about how to participate in Mutagen’s development, see the contributing guidelines.

What about other downstream users, companies, and projects using Mutagen?

Mutagen’s open source licenses continue to allow the embedding and use of Mutagen by other projects, products, and tooling.

Who will provide support for Mutagen projects and products?

In the short term, support for Mutagen’s projects and products will continue to be provided through the existing support channels. We will work to merge support into Docker’s channels in the near future.

Is this replacing VirtioFS, gRPC-FUSE, or osxfs?

No, virtual filesystems will continue to be the default path for bind mounts in Docker Desktop. Docker is continuing to invest in the performance of these technologies.

How does Mutagen compare with other virtual or remote filesystems?

Mutagen is a synchronization engine rather than a virtual or remote filesystem. Mutagen can be used to synchronize files to native filesystems, such as ext4, trading typically imperceptible amounts of latency for full native filesystem performance.
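To illustrate the general difference, the sketch below copies new or changed files from one directory into another, so that whatever reads the target works against ordinary files on its own filesystem. It is a deliberately simplified one-way mirror written for this explanation, not Mutagen's algorithm or API; the function name `sync_one_way` is hypothetical, and the sketch does not handle deletions, conflicts, or file watching.

```python
import filecmp
import shutil
import tempfile
from pathlib import Path


def sync_one_way(source: Path, target: Path) -> list[str]:
    """Copy new or changed files from `source` into `target`; return the copied relative paths."""
    copied = []
    for src_file in sorted(source.rglob("*")):
        if not src_file.is_file():
            continue
        rel = src_file.relative_to(source)
        dst_file = target / rel
        # Copy only when the target file is missing or its contents differ.
        if not dst_file.exists() or not filecmp.cmp(src_file, dst_file, shallow=False):
            dst_file.parent.mkdir(parents=True, exist_ok=True)
            shutil.copy2(src_file, dst_file)
            copied.append(rel.as_posix())
    return copied


# Small demonstration in a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    src, dst = Path(tmp, "src"), Path(tmp, "dst")
    (src / "app").mkdir(parents=True)
    dst.mkdir()
    (src / "app" / "main.py").write_text("print('hello')\n")
    first = sync_one_way(src, dst)   # copies app/main.py
    second = sync_one_way(src, dst)  # nothing changed, so nothing is copied
    print(first, second)
```

The trade-off described above shows up even in this toy version: changes reach the target only after a sync pass (a small delay), but reads on the target go straight to a native copy of the file.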

How does Mutagen compare with other synchronization solutions?

Mutagen focuses primarily on configuration and functionality relevant to developers.

How can I get started with Mutagen?

To get started with Mutagen, download the latest version of Docker Desktop and install the Mutagen Extension from the Docker Desktop Extensions Marketplace.


CNAs, or CVE Numbering Authorities, are an essential part of vulnerability reporting because they compose a cohort of bug bounty programs, organizations, and companies involved in the secure software supply chain. When millions of developers depend on your projects, like in Docker’s case, it’s important to be a CNA to reinforce your commitment to cybersecurity and good stewardship as part of the software supply chain.

Previously, Docker reported CVEs directly through MITRE and GitHub without CNA status (there are many other organizations that still do this today, and CVE reporting does not require CNA status).

But not anymore! Docker is now officially a CNA under MITRE, which means you should get better notifications and documentation when we publish a vulnerability.

What are CNAs? (And where does MITRE fit in?)

To understand how CNAs, CVEs, and MITRE fit together, let’s start with the reason behind all those acronyms. Namely, a vulnerability.

When a vulnerability pops up, it’s really important that it has a unique identifier so developers know they’re all talking about the same vulnerability. (Let’s be honest, calling it, “That Java bug” really isn’t going to cut it.)

So someone has to give it a CVE (Common Vulnerabilities and Exposures) designation. That’s where a CNA comes in. They submit a request to their root CNA, which is often MITRE (and no, MITRE isn’t an acronym). A new CVE number, or several, is then assigned depending on how the report is categorized, thus making it official. And to keep all the CNAs on the same page, there are companies that maintain the CVE system.
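To make the identifier concrete: a CVE ID is the literal prefix CVE, a four-digit year, and a sequence number of four or more digits. The sketch below only checks that shape. The helper name is_cve_id is ours for illustration and is not part of any CVE tooling, and a well-formed ID is not necessarily an assigned one.

```shell
#!/bin/sh
# is_cve_id: hypothetical helper; succeeds when $1 has the shape CVE-YYYY-NNNN (4+ digit sequence).
is_cve_id() {
  printf '%s\n' "$1" | grep -Eq '^CVE-[0-9]{4}-[0-9]{4,}$'
}

# Compare a real identifier with an informal description of a bug.
for id in "CVE-2021-44228" "That Java bug"; do
  if is_cve_id "$id"; then
    echo "$id: well-formed CVE ID"
  else
    echo "$id: not a CVE ID"
  fi
done
```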

MITRE is a non-profit corporation that maintains the system with sponsorship from the US government’s CISA (Cybersecurity and Infrastructure Security Agency). Like CISA, MITRE helps lead the charge in protecting public interest when it comes to defense, cybersecurity, and a myriad of other industries.

The CVE system provides references and information about the scary-ickies or the ultra terrifying vulnerabilities found in the world of technology, making vulnerabilities for shared resources and technologies easy to publicize, notify folks about, and take action against.

If you feel like learning more about the CVE program check out MITRE’s suite of videos here or the program’s homepage.

Where does Docker fit in?

Docker has reported CVEs in the past directly through MITRE and has, for example, used the reporting functionality through GitHub on Docker Engine. By becoming a CNA, however, we can take a more direct and coordinated approach with our reporting.

And better reporting means better awareness for everyone using our tools!

Docker went through the process of becoming a CNA (including some training and homework) so we can more effectively report on vulnerabilities related to Docker Desktop and Docker Hub. The checklist for CNA status also includes having appropriate disclosure and advisory policies in place. Docker’s status as a CNA means we can centralize CVE reporting for our different offerings connected to Docker Desktop, as well as those connected to Docker Hub and the registry.

By becoming a CNA, Docker can be more active in the community of companies that make up the software supply chain. MITRE, as the default CNA and one of the root CNAs (CISA is a root CNA too), acts as the unbiased reviewer of vulnerability reports. Other organizations, vendors, or bug bounty programs, like Microsoft, HashiCorp, Rapid7, VMware, Red Hat, and hundreds of others, also act as CNAs.

Keep in mind that Docker’s status as a CNA means we’ll only report for products and projects we maintain. Being a CNA also includes consideration of when certain products might be end-of-life and how that affects CVE assignment.

Ch-ch-changes?

Will the experience of using Docker Hub and Docker Desktop change because of Docker’s new CNA status? Short answer: no. Long answer: the core experience of using Docker will not change. We’ve just leveled up in tackling vulnerabilities and providing better notifications about those vulnerabilities.

By better notifications, we mean a centralized repository for our security advisories. Because these reported vulnerabilities will link back to MITRE’s CVE program, it makes them far easier to search for, track, and tell your friends, your dog, or your cat about.

To see the latest vulnerabilities as Docker reports them and CVEs become assigned, check out our advisory location here: https://docs.docker.com/security/. For historic advisories also check https://docs.docker.com/desktop/release-notes/ and https://docs.docker.com/engine/release-notes/.

Keep in mind that CVEs that get reported are those that affect the consumers of Docker’s toolset and will require remediation from us and potential upgrade actions from the user, just like any other CVE announcement you might have seen in the news recently.

So keep your fins ready in case a CVE we announce applies to you.

Help Docker help you

We still encourage users and security researchers to report anything concerning they encounter with their use of Docker Hub and/or Docker Desktop to security@docker.com. (For reference, our security and privacy guidelines can be found here.)

We also still encourage proper configuration according to Docker documentation and not doing anything Moby wouldn’t do. (That means you should be whale-intentioned in your builds and help your fin-ends and family using Docker configure it properly.)

And while we can’t promise to stop using whale puns any time soon, we can promise to continue to be good stewards for developers — and a big part of that includes proper security procedures.

]]>

Rapid7 is a Boston-based provider of security analytics and automation solutions enabling organizations to implement an active approach to cybersecurity. Over 10,000 customers rely on Rapid7 technology, services, and research to improve security outcomes and securely advance their organizations.

The security space is constantly changing, with new threats arising every day. To meet their customers’ needs, Rapid7 focuses on increasing the reliability and velocity of software builds while also maintaining their quality.

That’s why Rapid7 turned to Docker. Their teams use Docker to aid development, support the sales pipeline, provide testing environments, and deploy to production in an automated, reliable way.

By using Docker, Rapid7 transformed their onboarding process by automating manual processes. Setting up a new development environment now takes minutes instead of days. Their developers can produce faster builds that enable regular code releases to support changing requirements.

Automating the onboarding process

When developers first joined Rapid7, they were met with a static, manual process that was time consuming and error-prone. Configuring a development environment isn’t exciting for most developers. They want to spend most of their time creating! And setting up the environment is the least glamorous part of the process.

Docker helped automate this cumbersome process. Using Docker, Rapid7 could create containerized systems that were preconfigured with the right OS and developer tools. Docker Compose enabled multiple containers to communicate with each other, and it had the hooks needed to incorporate custom scripting and debugging tools.

Once the onboarding setup was configured through Docker, the process was simple for other developers to replicate. What once took multiple days now takes minutes.

Expanding containers into production

The Rapid7 team streamlined the setup of the development environment by using a Dockerfile. This helped them create an image with every required dependency and software package.

But they didn’t stop there. As this single Docker image evolved into a more complex system, they realized that they’d need more Docker images and container orchestration. That’s when they integrated Docker Compose into the setup.

Docker Compose simplified Docker image builds for each of Rapid7’s environments. It also encouraged a high level of service separation that split out different initialization steps into separate bounded contexts. Plus, they could leverage Docker Compose for inter-container communication, private networks, Docker volumes, defining environment variables with anchors, and linking containers for communication and aliasing.

This was a real game changer for Rapid7, because Docker Compose truly gave them unprecedented flexibility. Teams then added scripting to orchestrate communication between containers when a trigger event occurs (like when a service has completed).

Using Docker, Docker Compose, and scripting, Rapid7 was able to create a solution for the development team that could reliably replicate a complete development environment. To optimize the initialization, Rapid7 wanted to decrease the startup times beyond what Docker enables out of the box.

Optimizing build times even further

After creating Docker base images, the bottom layers rarely have to change. Essentially, that initial build is a one-time cost. Even if the images change, the cached layers make it a breeze to get through that process quickly. However, you do have to reinstall all software dependencies from scratch again, which is a one-time cost per Docker image update.

Committing the installed software dependencies back to the base image allows for a simple, incremental, and often skippable stage. The Docker image is always usable in development and production, all on the development computer.

All of these efficiencies together streamlined an already fast 15-minute process down to 5 minutes, making it easy for developers to get productive faster.

How to build it for yourself

Check out code examples and explanations about how to replicate this setup for yourself. We’ll now tackle the key steps you’ll need to follow to get started.

Downloading Docker

Download and install the latest version of Docker to be able to perform Docker-in-Docker. Docker-in-Docker lets your Docker environment have Docker installed within a container. This lets your container run other containers or pull images.

To enable Docker-in-Docker, you can apt install the docker.io distribution as one of your first commands in your Dockerfile. Once the container is configured, mount the Docker socket from the host installation:

# Dockerfile
FROM ubuntu:20.04

# Install dependencies
RUN apt update && \
    apt install -y docker.io

Next, build your Docker image by running the following command in your CLI or shell script file:

docker build -t <docker-image-name> .

Then, start your Docker container with the following command:

docker run -v /var/run/docker.sock:/var/run/docker.sock -ti <docker-image-name>
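Before relying on this setup, it can help to confirm from inside the container that the host socket really was mounted. The following is a minimal sketch, assuming the default socket path used above. The helper name has_docker_socket is hypothetical.

```shell
#!/bin/sh
# has_docker_socket: hypothetical helper; succeeds if the given path
# (default: /var/run/docker.sock) exists and is a Unix socket.
has_docker_socket() {
  [ -S "${1:-/var/run/docker.sock}" ]
}

if has_docker_socket; then
  echo "Docker socket found: docker commands will talk to the host daemon"
else
  echo "Docker socket missing: re-run the container with the -v flag shown above" >&2
fi
```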

Using a Docker commit script

Committing layered changes to your base image is what drives the core of the Dev Environments in Docker. Docker fetches the container ID based on the service name, and the changes you make to the running container are committed to the desired image.

Because the host Docker socket is mounted into the container when executing the docker commit command, the container will apply the change to the base image located in the host Docker installation.

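#!/bin/bash
# Arguments: the Compose service name, then the image to commit to
SERVICE=${1}
IMAGE=${2}

# Look up the container ID by service name
CONTAINER_ID=$(docker ps -aqf "name=${SERVICE}")

# Commit changes to image only if a matching container exists
if [ -n "$CONTAINER_ID" ]; then
  echo "--- Committing changes from $SERVICE to $IMAGE ---"
  docker commit "$CONTAINER_ID" "$IMAGE"
fi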

Updating your environment

Mount the Docker socket from the host installation, and mount the source code with the :z option. The :z option tells Docker that the content will be shared between containers; without it, mounting the source code is insufficient.

You’ll have to mount the host machine’s Docker socket into the container. This lets any Docker operations performed inside the container actually modify the host Docker images and installation. Without this, changes made in the container are only going to persist in the container until it’s stopped and removed.

Add the following code into your Docker Compose file:

# docker-compose.yaml
services:
  service-name:
    image: image-with-docker:latest
    volumes:
      - /host/code/path:/container/code/path:z
      - /var/run/docker.sock:/var/run/docker.sock

Orchestrating components

Once Docker Compose has the appropriate services configured, you can start your environment in two different ways. Use the docker compose up command, or, once the containers have been created, start an individual service and its linked services with the following command:

docker compose start webserver

The main container references the linked service via the linked names. This makes it very easy to override any environment variables with the provided names. Check out the YAML file below:

services:
  webserver:
  mysql:
    ports:
      - '3306:3306'
    volumes:
      - dbdata:/var/lib/mysql
  redis:
    ports:
      - '6379:6379'
    volumes:
      - redisdata:/data

volumes:
  dbdata:
  redisdata:

Notes: For each service, you’ll want to choose and specify your preferred Docker Official Image version. Additionally, the MySQL Docker Official Image comes with important environment variables defaulted in — though you can specify them here as needed.

Managing separate services

Starting a small part of the stack can also be useful if a developer only needs that specific piece. For example, if we just wanted to start the MySQL service, we’d run the following command:

docker compose start mysql

We can stop this service just as easily with the following command:

docker compose stop mysql

Configuring your environment

Mounting volumes into the database services lets your containers apply the change to their respective databases while letting those databases remain as ephemeral containers.

In the main entry point and script orchestrator, pass the -p flag to ./start.sh to set the PROD_BUILD environment variable. The build reads the variable inside the entry point and builds either a production or a development version of the environment.

First, here’s how that script looks:

# start.sh
# Walk through the arguments and set PROD_BUILD when -p or --prod is given
while [ "$1" != "" ]; do
  case $1 in
    -p | --prod) PROD_BUILD="true" ;;
  esac
  shift
done

Second, here’s a sample shell script:

export PROD_BUILD=$PROD_BUILD
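The two pieces above could live in a single script. The following is a minimal sketch rather than Rapid7’s actual script: the parse_args function name and the default value of false are our assumptions. It loops on the argument count instead of comparing "$1" to an empty string, so an empty argument does not end parsing early.

```shell
#!/bin/sh
# Sketch of a combined start.sh: parse -p/--prod, then export PROD_BUILD
# so that Docker Compose and child processes can read it.
PROD_BUILD="false"   # assumed default: development build

# parse_args: hypothetical helper; sets PROD_BUILD when -p or --prod is present.
parse_args() {
  while [ "$#" -gt 0 ]; do
    case "$1" in
      -p | --prod) PROD_BUILD="true" ;;
    esac
    shift
  done
}

parse_args "$@"
export PROD_BUILD
echo "PROD_BUILD=$PROD_BUILD"
```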

Third, here’s your sample Docker Compose file:

# docker-compose.yaml
services:
  build-frontend:
    entrypoint:
      - bash
      - -c
      - "[[ \"$PROD_BUILD\" == \"true\" ]] && make fe-prod || make fe-dev"

Note: Don’t forget to add your preferred image under build-frontend if you’re aiming to make a fully functional Docker Compose file.
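One caveat with the A && B || C idiom in the entrypoint above: if make fe-prod itself fails, the shell falls through and runs make fe-dev as well. An explicit if/else avoids that. The sketch below shows the selection logic on its own. The select_target helper is hypothetical and only prints the make target rather than running it.

```shell
#!/bin/sh
# select_target: hypothetical helper; prints the make target that matches
# the current value of PROD_BUILD (anything other than "true" means dev).
select_target() {
  if [ "${PROD_BUILD:-false}" = "true" ]; then
    echo "fe-prod"
  else
    echo "fe-dev"
  fi
}

# In a real entrypoint this line would be: make "$(select_target)"
echo "would run: make $(select_target)"
```

With this shape, a failed production build stops with an error instead of silently producing a development build.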

What if we need to troubleshoot any issues that arise? Debugging inside a running container only requires the appropriate debugging library in the mounted source code and an open port for attaching the debugger. Here’s our YAML file:

# docker-compose.yaml
services:
  webserver:
    ports:
      - '5678:5678'
    links:
      - mysql
      - redis
    entrypoint:
      - bash
      - -c
      - ./start-webserver.sh

Note: Like in our previous examples, don’t forget to specify an image underneath webserver when creating a functional Docker Compose file.

In your editor of choice, provide a launch configuration to attach the debugger using the specified port. Once the container is running, run the configuration and the debugger will be attached:

# launch-setting.json
{
  "configurations": [
    {
      "name": "Python: Remote Attach",
      "type": "python",
      "request": "attach",
      "port": 5678,
      "host": "localhost",
      "pathMappings": [
        {
          "localRoot": "${workspaceFolder}",
          "remoteRoot": "."
        }
      ]
    }
  ]
}

Confirming that everything works

Once the full stack is running, it’s easy to access the main entry point web server via a browser on the defined webserver port.

The docker ps command will show your running containers. Docker is managing communication between containers.

The entire software service is now running completely in Docker. All the code lives on the host computer and is mounted into the Docker container. The development environment is now completely portable using only Docker.

Remembering important tradeoffs

This approach has some limitations. First, running your developer environment in Docker will incur additional resource overhead. Docker has to run and requires extra computing resources as a result. Also, including multiple stages will require scripting as a final orchestration layer to enable communication between containers.

Wrapping up

Rapid7’s development team uses Docker to quickly create their development environments. They use the Docker CLI, Docker Desktop, Docker Compose, and shell scripts to create a unique and robust Docker-friendly environment. They can use this to spin up any part of their development environment.

The setup also helps Rapid7 compile frontend assets, start cache and database servers, run the backend service with different parameters, or start the entire application stack. Using a “Docker-in-Docker” approach of mounting the Docker socket within running containers makes this possible. Docker’s ability to commit layers to the base image after dependencies are either updated or installed is also key.

The shell scripts will export the required environment variables and then run specific processes in a specific order. Finally, Docker Compose makes sure that the appropriate service containers and dependencies are running.

Achieving future development goals

Relying on the Docker tool chain has been truly beneficial for Rapid7, since this has helped them create a consistent environment compatible with any part of their application stack. This integration has helped Rapid7 do the following:

- Deploy extremely reliable software to advanced customer environments

- Analyze code before merging it in development

- Deliver much more stable code

- Simplify onboarding

- Form an extremely flexible and configurable development environment

By using Docker, Rapid7 is continuously refining its processes to push past the boundaries of what’s possible. Their next goal is to deliver production-grade stable builds on a daily basis, and they’re confident that Docker can help them get there.

]]>Before today, you could only use Docker Hub to store and distribute container images — or artifacts usable by container runtimes. This became a limitation of our platform, since container image distribution is just the tip of the application delivery iceberg. Nowadays, modern application delivery requires numerous types of artifacts:

- Helm charts

- WebAssembly modules

- Docker Volumes

- SBOMs

- OPA bundles

- …and many other custom artifacts

Developers often share these with clients that need them since they add immense value to each project. And while the OCI working groups are busy releasing the latest OCI Artifact Specification, we still have to package application artifacts as OCI images in the meantime.

Docker Hub acts as an image registry and is perfectly suited for distributing application artifacts. That’s why we’ve added support for any software artifact — packaged as an OCI image — to Docker Hub.

What’s the Open Container Initiative (OCI)?

Back in 2015, we helped establish the Open Container Initiative as an open governance structure to standardize container image formats, container runtimes, and image distribution.

The OCI maintains a few core specifications. These govern the following:

- How to package filesystem bundles

- How to launch containerized, cross-platform apps

- How to make packaged content accessible to remote clients

The Runtime Specification determines how OCI images and runtimes interact. Next, the Image Specification outlines how to create OCI images. Finally, the Distribution Specification defines how to make content distribution interoperable.

The OCI’s overall aim is to boost transparency, runtime predictability, software compatibility, and distribution. We’ve since donated our own container format and runC OCI-compliant runtime to the OCI, plus given the OCI-compliant distribution project to the CNCF.

Why are we adding OCI support?

Container images are integral to supporting your containerized application builds. We know that images accumulate between projects, making centralized cloud storage essential to efficiently manage resources. Developers shouldn’t have to rely on local storage or wonder if these resources are readily accessible. However, we also know that developers want to store a variety of artifacts within Docker Hub.

Storing your artifacts in Docker Hub unlocks “anywhere access” while also enabling improved collaboration through Docker Hub’s standard sharing capabilities. This aligns us more closely with the OCI’s content distribution mission by giving users greater control over key pieces of application delivery.

How do I manage different OCI artifacts?

We recommend using dedicated tools to help manage non-container OCI artifacts, like the Helm CLI for Helm charts or the OCI Registry-as-Storage (ORAS) CLI for arbitrary content types.

Let’s walk through a few use cases to showcase OCI support in Docker Hub.

Working with Helm charts

Helm chart support was your most-requested feature, and we’ve officially added it to Docker Hub! So, how do you take advantage? We’ll create a simple Helm chart and push it to Docker Hub. This process will follow Helm’s official guide for storing Helm charts as OCI images in registries.

First, we’ll create a demo Helm chart:

$ helm create demo

This’ll generate a familiar Helm chart boilerplate of files that you can edit:

demo
├── Chart.yaml
├── charts
├── templates
│   ├── NOTES.txt
│   ├── _helpers.tpl
│   ├── deployment.yaml
│   ├── hpa.yaml
│   ├── ingress.yaml
│   ├── service.yaml
│   ├── serviceaccount.yaml
│   └── tests
│       └── test-connection.yaml
└── values.yaml

3 directories, 10 files

Once we’re done editing, we’ll need to package the Helm chart as an OCI image:

$ helm package demo

Successfully packaged chart and saved it to: /Users/martine/tmp/demo-0.1.0.tgz

Don’t forget to log in to Docker Hub before pushing your Helm chart. We recommend creating a Personal Access Token (PAT) for this. You can export your PAT via an environment variable and log in as follows:

$ echo $REG_PAT | helm registry login registry-1.docker.io -u martine --password-stdin

Pushing your Helm chart

You’re now ready to push your first Helm chart to Docker Hub! But first, make sure you have write access to your Helm chart’s destination namespace. In this example, let’s push to the docker namespace:

$ helm push demo-0.1.0.tgz oci://registry-1.docker.io/docker

Pushed: registry-1.docker.io/docker/demo:0.1.0

Digest: sha256:1e960ad1693c234b66ec1f9ddce80986cbf7159d2bb1e9a6d2c2cd6e89925e54

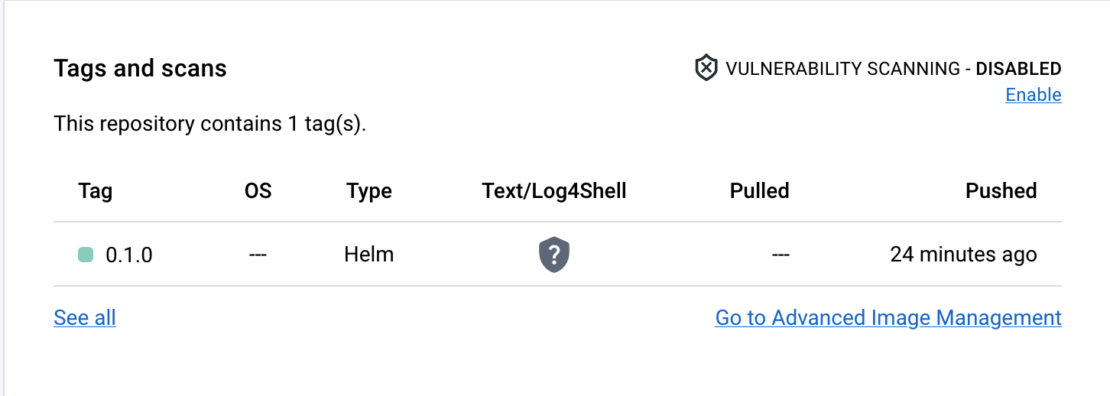

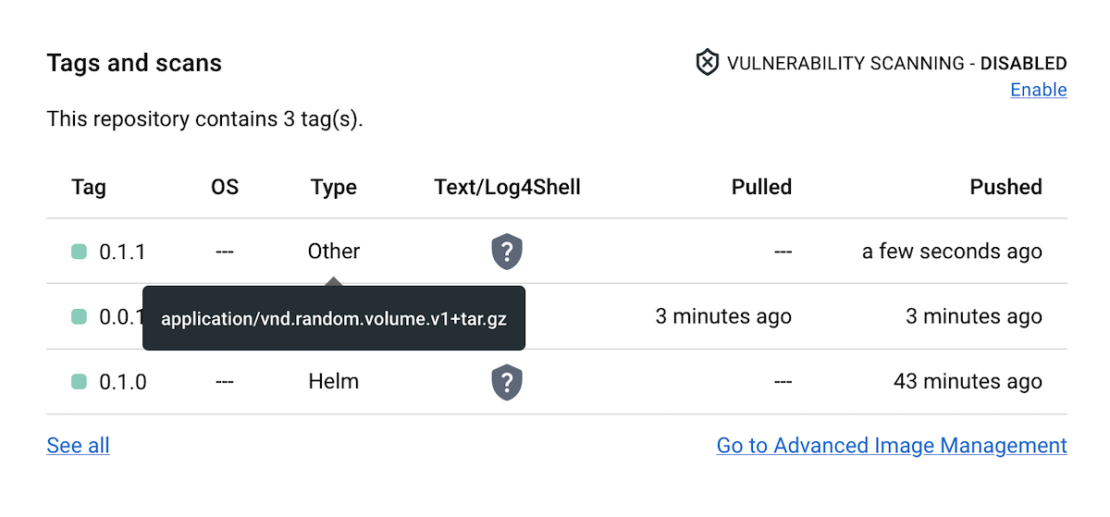
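Once pushed, the chart can be consumed straight from the registry. Helm addresses OCI charts as oci://, followed by the registry, namespace, and chart name, with the version passed separately. The sketch below builds that reference. The chart_ref helper and the my-release release name are hypothetical, and the commented commands assume Helm 3.8 or later and read access to the repository.

```shell
#!/bin/sh
REGISTRY="registry-1.docker.io"

# chart_ref: hypothetical helper; prints the OCI reference for a
# namespace ($1) and chart name ($2) on Docker Hub.
chart_ref() {
  echo "oci://${REGISTRY}/$1/$2"
}

ref="$(chart_ref docker demo)"
echo "$ref"

# With Helm installed, the chart could then be fetched or installed:
#   helm pull "$ref" --version 0.1.0
#   helm install my-release "$ref" --version 0.1.0
```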

Viewing your Helm chart and using filters

Now, if you log in to Docker Hub and navigate to the demo repository detail, you’ll find your Helm chart in the list of repository tags:

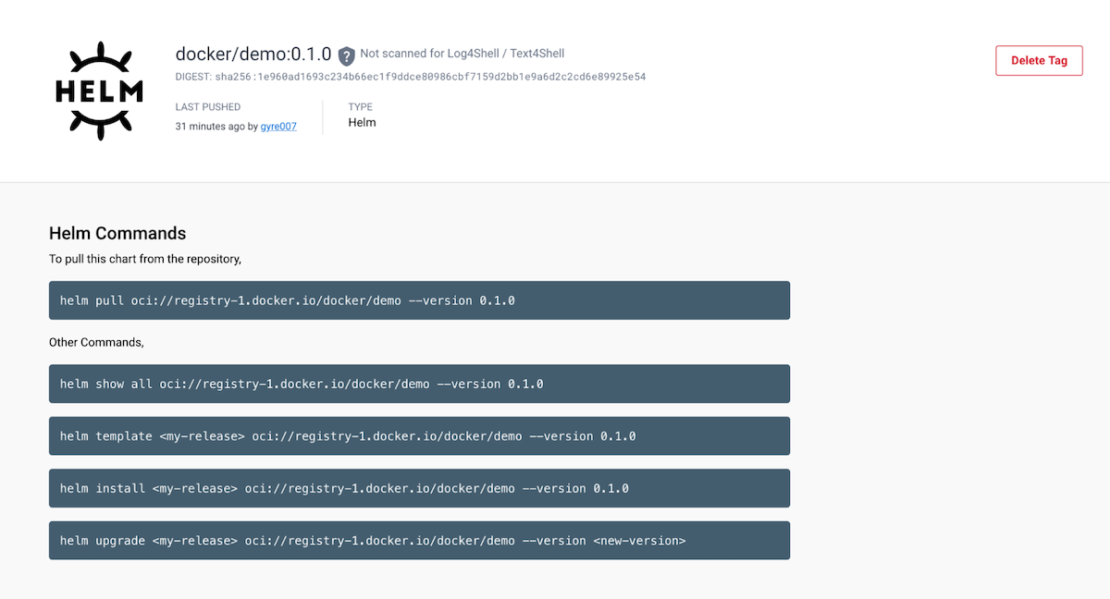

You can navigate to the Helm chart page by clicking on the tag. The page displays useful Helm CLI commands:

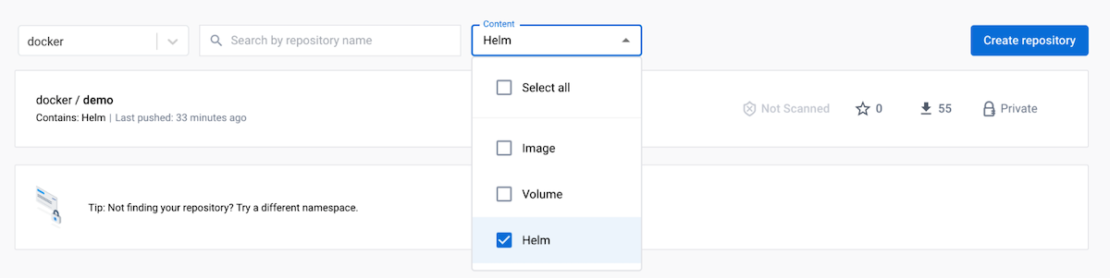

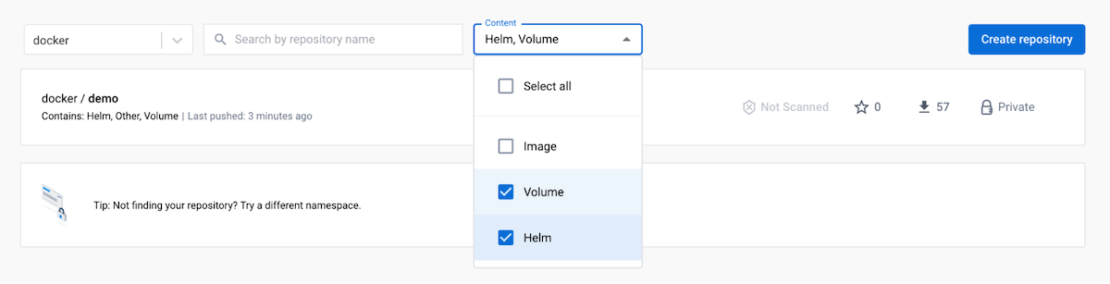

Repository content management is now easier. We’ve improved content discoverability by adding a drop-down button to quickly filter the repository list by content type. Simply click the Content drop-down and select Helm from the list:

Working with volumes

Developers use volumes throughout the Docker ecosystem to share arbitrary application data like database files. You can already back up your volumes using the Volumes Backup & Share extension that we recently launched. You can now also filter repositories to find those containing volumes using the same drop-down menu.

But until Volumes Backup & Share pushes volumes as OCI artifacts instead of images (coming soon!), you can use the ORAS CLI to push volumes.

Note: We recommend ORAS CLI versions 0.15 or later since these bring full OCI registry client functionality.

Let’s walk through a simple use case that mirrors the examples documented by the ORAS CLI. First, we’ll create a simple file we want to package as a volume:

$ echo "bar" > foo.txt

For Docker Hub to recognize this volume, we must attach a config file to the OCI image upon creation and mark it with a specific media type. The file can contain arbitrary content, so let’s create one:

$ echo "{\"name\":\"foo\",\"value\":\"bar\"}" > config.json

With this step completed, you’re now ready to push your volume.

Pushing your volume

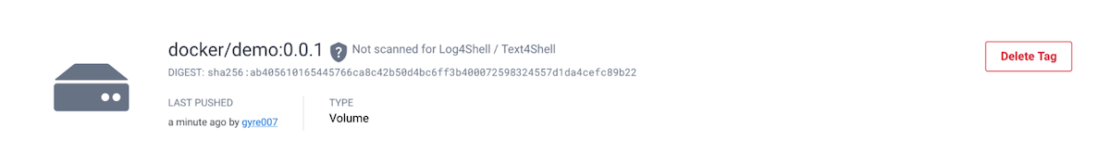

Here’s where the magic happens. The media type Docker Hub needs to successfully recognize the OCI image as a volume is application/vnd.docker.volume.v1+tar.gz. You can attach the media type to the config file and push it to Docker Hub with the following command (plus its resulting output):

$ oras push registry-1.docker.io/docker/demo:0.0.1 --config config.json:application/vnd.docker.volume.v1+tar.gz foo.txt:text/plain

Uploading b5bb9d8014a0 foo.txt

Uploaded b5bb9d8014a0 foo.txt

Pushed registry-1.docker.io/docker/demo:0.0.1

Digest: sha256:f36eddbab8459d0ad1436b7ca8af6bfc512ec74f45d8136b53c16db87562016e
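The reference passed to oras push packs three pieces of information into one string: the registry host, the repository, and the tag. The following sketch splits such a reference with POSIX parameter expansion. The split_ref helper is hypothetical, and it assumes the reference names its registry explicitly, has no port number, and ends in a tag rather than a digest.

```shell
#!/bin/sh
# split_ref: hypothetical helper; prints "registry repository tag"
# for references shaped like host/namespace/name:tag.
split_ref() {
  ref="$1"
  registry="${ref%%/*}"    # everything before the first slash
  rest="${ref#*/}"         # everything after the first slash
  repository="${rest%:*}"  # drop the trailing :tag
  tag="${rest##*:}"        # keep only the tag
  echo "$registry $repository $tag"
}

split_ref "registry-1.docker.io/docker/demo:0.0.1"
```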

We now have two types of content in the demo repository as shown in the following breakdown:

If you navigate to the content page, you’ll see some basic information that we’ll expand upon in future iterations. This will boost visibility into a volume’s contents.

Handling generic content types

If you don’t use the application/vnd.docker.volume.v1+tar.gz media type when pushing the volume with the ORAS CLI, Docker Hub will mark the artifact as generic to distinguish it from recognized content.

Let’s push the same volume but use the application/vnd.random.volume.v1+tar.gz media type instead of the one known to Docker Hub:

$ oras push registry-1.docker.io/docker/demo:0.1.1 --config config.json:application/vnd.random.volume.v1+tar.gz foo.txt:text/plain

Exists 7d865e959b24 foo.txt

Pushed registry-1.docker.io/docker/demo:0.1.1

Digest: sha256:d2fb2b176ee4e326f1f34ecdaede8db742f2c444cb2c9ceff0f5c8b743281c95

You can see the new content is assigned a generic Other type. We can still view the tagged content’s media type by hovering over the type label. In this case, that’s application/vnd.random.volume.v1+tar.gz:

If you’d like to filter the repositories that contain both Helm charts and volumes, use the same drop-down menu in the top-right corner:

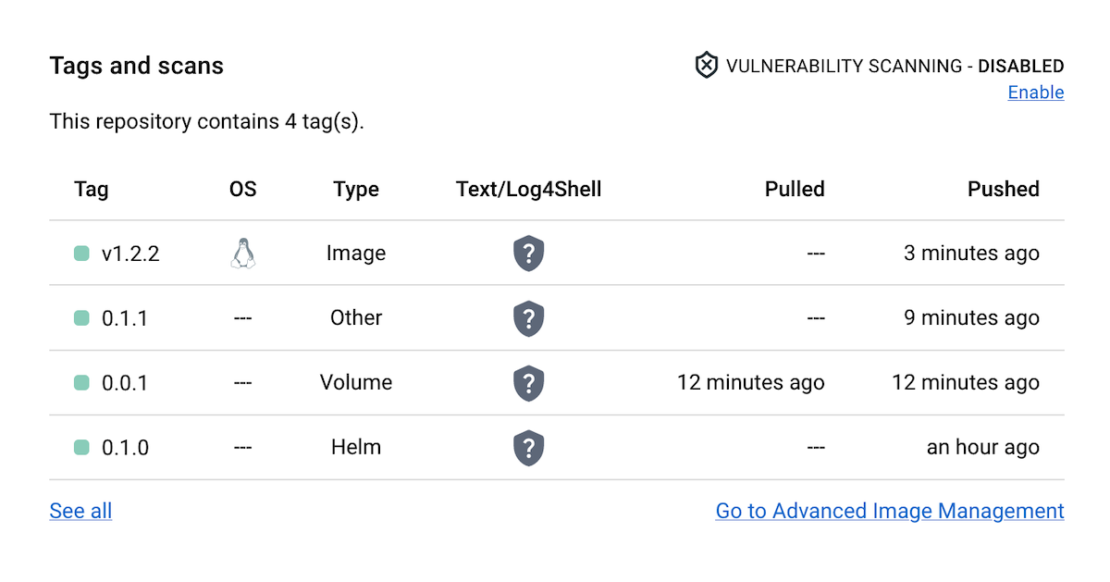
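The content types seen so far can be summarized as a mapping from an artifact’s config media type to the label shown in the repository. The sketch below is an illustration only and does not describe how Docker Hub is implemented. The classify_media_type helper is hypothetical, the volume media type comes from the example above, and the Helm and image entries are the config media types that Helm and OCI or Docker images commonly use.

```shell
#!/bin/sh
# classify_media_type: hypothetical helper; maps a config media type
# to a display label, falling back to "Other" for unrecognized types.
classify_media_type() {
  case "$1" in
    application/vnd.docker.volume.v1+tar.gz)
      echo "Volume" ;;
    application/vnd.cncf.helm.config.v1+json)
      echo "Helm" ;;
    application/vnd.oci.image.config.v1+json | application/vnd.docker.container.image.v1+json)
      echo "Image" ;;
    *)
      echo "Other" ;;
  esac
}

classify_media_type "application/vnd.docker.volume.v1+tar.gz"
classify_media_type "application/vnd.random.volume.v1+tar.gz"
```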

Working with container images

Finally, you can continue pushing your regular container images to the exact same repository as your other artifacts. Say we re-tag the Redis Docker Official Image and push it to Docker Hub:

$ docker tag redis:3.2-alpine docker/demo:v1.2.2

$ docker push docker/demo:v1.2.2

The push refers to repository [docker.io/docker/demo]

a1892d5d1a6d: Mounted from library/redis

e41876edb6d0: Mounted from library/redis

7119119b7542: Mounted from library/redis

169a281fff0f: Mounted from library/redis

04c8ef03e935: Mounted from library/redis

df64d3292fd6: Mounted from library/redis

v1.2.2: digest: sha256:359cfebb00bef01cda3bc1ca453e6455c770a246a06ad8df499a28118c144eda size: 1570

Viewing your container images

If you now visit the demo repository page on Docker Hub, you’ll see every artifact listed under Tags and scans:

We’ll also introduce more features soon to help you better organize your application content, so stay tuned for more announcements!

Follow along for more updates

All developers can now access and choose from more robust sets of artifacts while building and distributing applications with Docker Hub. Not only does this remove existing roadblocks, but it’ll hopefully encourage you to create and distribute even more exciting applications.

But our mission doesn’t end here! We’re continually working to bolster our OCI support. While the OCI Artifact Specification is considered a release candidate, full Docker Hub support for OCI Reference Types and the accompanying Referrers API is on the horizon. Stay tuned for upcoming enhancements, improved repo organization, and more.

Note: The OCI artifact has now been removed from OCI image-spec. Refer to this update for more information.

]]>